Last week, the White House announced a significant deal with several artificial intelligence companies, aimed at managing the risks posed by A.I. technology. While the agreement called for safe, secure, and transparent A.I. development, artists and creatives have expressed disappointment, stating that the deal does little to protect their interests. Job loss and intellectual property theft are among the challenges artists fear in the age of A.I., and they argue that the administration’s deal does not adequately address these concerns.

Artists’ Dissatisfaction with the A.I. Deal

The voluntary commitments made by A.I. giants such as Amazon, Google, Microsoft, and OpenAI may be well-intentioned, but artists argue that they are insufficient. The deal focuses on ensuring that A.I. products are safe and free from biosecurity or cybersecurity risks, and on addressing societal risks like bias and discrimination. However, artists say it fails to acknowledge what they describe as the mass act of theft that A.I. generators are built upon, and that it offers no concrete solutions for their concerns.

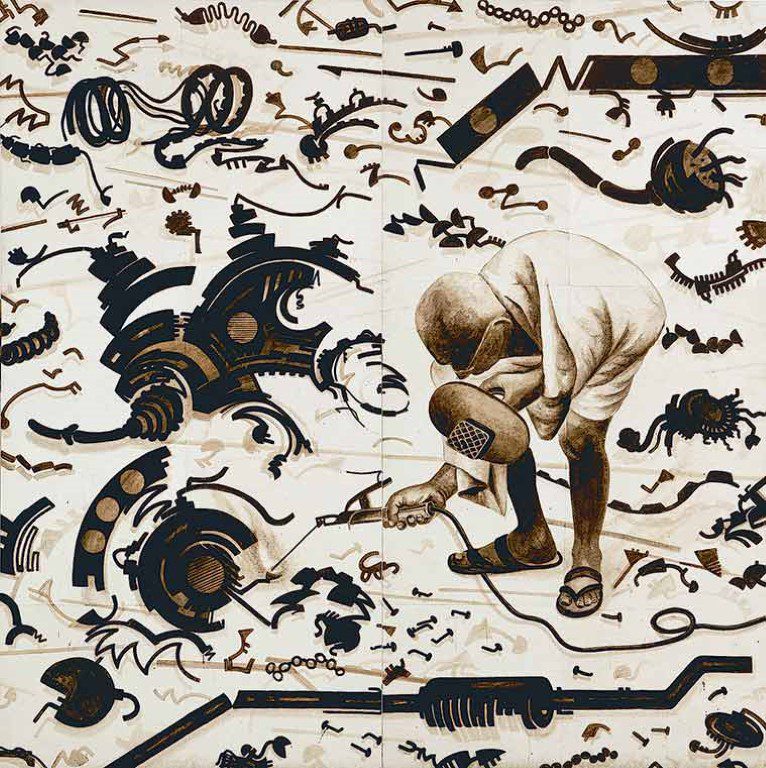

Tools Developed by Artists to Protect Against A.I. Misuse

In response to the lack of protection in the White House’s deal, artists Mathew Dryhurst and Holly Herndon have taken matters into their own hands. They developed tools like Spawning, which lets artists set permissions on how their style and likeness can be used, and HaveIBeenTrained, which lets artists check whether their work was used to train popular A.I. art models. Despite these efforts, artists like Molly Crabapple argue that without comprehensive regulations, only the most elite illustrators will survive the changes brought on by A.I. in the creative industry.

Legal Battles Emerge as Artists Seek Justice

The discontent among artists has led to legal actions against A.I. companies. Comedian and actress Sarah Silverman, along with authors Christopher Golden and Richard Kadrey, filed a lawsuit against OpenAI and Meta, accusing the companies of violating their copyrights by using their work to train A.I. models without permission, credit, or compensation. Matthew Butterick, the lawyer representing Silverman, emphasizes the need for dataset transparency to ensure fairness and ethics for all creators.

Professors and Experts Warn of Ongoing Risks

Professor Ben Zhao and his research team developed Glaze, a technology that allows artists to protect their style from A.I. platforms. Zhao acknowledges the good intentions of the Biden administration but points out its lack of awareness of the real risks, including the misappropriation of content without consent or compensation. He argues that voluntary commitments are meaningless and calls for transparent regulation with defined goals and consequences for non-compliance.

Demands for Greater Protections

Artists and stakeholders in the art world propose various measures to protect creatives from A.I. misuse. Crabapple suggests “algorithmic disgorgement,” the destruction of algorithms trained on copyrighted work, as a penalty for violating copyrights. Artists also call for future A.I. models to be trained only on consensually obtained work, and for penalties against companies that engage in copyright theft. Dryhurst highlights the European Union’s laws on A.I. and data mining, emphasizing the importance of a standardized opt-out mechanism for creatives.

Conclusion

While the White House’s deal with A.I. corporations aimed to manage the risks associated with A.I. technology, artists and creatives argue that it falls short in protecting their interests. Job loss and intellectual property theft remain significant concerns, and voluntary commitments are seen as inadequate. Artists have taken matters into their own hands, developing tools to safeguard their work, but they emphasize the need for comprehensive regulation and stronger policy stances from the government to ensure fair treatment and protection in the age of A.I.
